Using fNIRS to study emotions in virtual reality environments

Have you ever watched a horror movie or read a really gripping thriller that made your heart skip a beat or two at the climax? If you know what I’m talking about, then we can agree on something: our minds are connected to our bodies in such a way that the emotions we experience cause physiological changes in us. But what are emotions? According to Deak [1], “emotions are subjective states that modulate and guide behavior as a collection of biological, social, and cognitive components”. Emotions play an important role in human rationality and influence the way we act and respond: they operate on both a conscious and a subconscious level and prepare our body to face different situations.

How can we elicit emotions?

It is amazing how our emotional state may change because of an indirect or fictional situation like a song, a book or a movie. Indeed, audio-visual stimuli (music, sounds, pictures, videos) and narrative stimuli have been widely used to elicit emotions, inducing different levels of valence and arousal [2]. This works because the presence cues in these stimuli let our mind make us feel as if we were present in a particular scenario.

So, what if we could make use of all these stimuli and fuse them into an environment where the sense of presence might be enhanced? We can do this by means of virtual reality (VR). Virtual reality allows us to simulate real-life scenarios in which the subject can freely interact with a dynamic environment, while the researcher still controls both the situations and the stimuli involved [3,4].

How can we measure emotions?

The interconnection between our mind and body is explained by the link between the central nervous system (CNS) and the autonomic nervous system (ANS). Human emotions originate in our brain, where several areas take part in regulating and feeling them, but they also produce distinct physiological responses [3], such as changes in heart and respiratory rates. These responses originate in the ANS, which is in turn regulated by the CNS. It seems reasonable, then, to study emotions and their effect on humans by combining measurements of CNS and ANS activity.

While the options to measure ANS activity are straightforward (heart or respiratory rate, galvanic skin response, or blood pressure), the many options to measure CNS activity have different advantages and disadvantages that have to be taken into account. Functional magnetic resonance imaging (fMRI) is the most frequently used technique to study brain activation patterns during cognitive tasks. However, the severe restrictions on participant movement, the sheer size of the scanner, and its loud, disturbing noise reduce the immersion within the experimental environment [3].

Figure 1: Combined fNIRS-Virtual Reality system

Could we, instead, use Near Infrared Spectroscopy (NIRS)?

Indeed, NIRS is a much better alternative for this kind of study. It is a non-invasive technique that measures changes in tissue hemodynamics (blood perfusion) by exploiting the distinct absorption spectra of oxy- and deoxy-hemoglobin in the near-infrared region (650-950 nm) [5]. Functional near-infrared spectroscopy (fNIRS) is a neuroimaging technique that uses NIRS to detect functional brain activation. It is based on a mechanism known as neurovascular coupling, in which an increase in oxygen consumption secondary to cortical neuronal activation is followed by an increase in cerebral blood flow. This change in blood perfusion leads to local changes in oxy- and deoxy-hemoglobin concentrations, which allow us to identify the cortical brain areas activated in response to specific stimuli [6-8].

In contrast to fMRI, multi-channel fNIRS devices like the Brite and OctaMon are wearable and have low susceptibility to motion artifacts. The subject can therefore move freely and explore the environment, while the researcher can still localize the activation of different cortical brain areas reliably, with high precision and good spatial resolution (~10 mm) [6]. This enables us to conduct research on paradigms that mimic real-life situations rather than artificial lab settings.
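For readers who like to see the arithmetic: the step from measured light attenuation to hemoglobin concentration changes is commonly done with the modified Beer-Lambert law, which relates the change in optical density at each wavelength to the concentration changes of both chromophores. The sketch below is only an illustration under stated assumptions, not the processing used by any particular device. The function name `mbll_concentrations` is hypothetical, and the extinction coefficients, source-detector distance and differential pathlength factors in the example are placeholder numbers, not tabulated values.

```python
import numpy as np


def mbll_concentrations(delta_od, ext, distance_cm, dpf):
    """Solve the modified Beer-Lambert law for two wavelengths.

    delta_od    : change in optical density, shape (2,) or (2, n_samples),
                  one row per wavelength.
    ext         : 2x2 extinction matrix, rows = wavelengths,
                  columns = [oxy-hemoglobin, deoxy-hemoglobin].
    distance_cm : source-detector distance in cm.
    dpf         : differential pathlength factor per wavelength, shape (2,).

    Returns the concentration changes [oxy, deoxy] in the units implied
    by the extinction coefficients.
    """
    delta_od = np.asarray(delta_od, dtype=float)
    ext = np.asarray(ext, dtype=float)
    # Effective optical path length for each wavelength.
    path = distance_cm * np.asarray(dpf, dtype=float)
    # delta_od = (ext * path) @ [d_oxy, d_deoxy]  ->  solve the 2x2 system.
    system = ext * path[:, None]
    return np.linalg.solve(system, delta_od)


# Placeholder inputs (illustrative only, NOT tabulated coefficients).
# The only property they mimic is that deoxy-hemoglobin absorbs more at
# the shorter wavelength and oxy-hemoglobin more at the longer one.
example_ext = np.array([[0.6, 1.5],
                        [1.1, 0.8]])
example_dpf = np.array([6.0, 6.0])
example_od = np.array([0.010, 0.020])
d_oxy, d_deoxy = mbll_concentrations(example_od, example_ext, 3.0, example_dpf)
```

Two wavelengths give two equations for two unknowns, which is why the system can be solved directly; with more wavelengths a least-squares fit would be the natural replacement.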

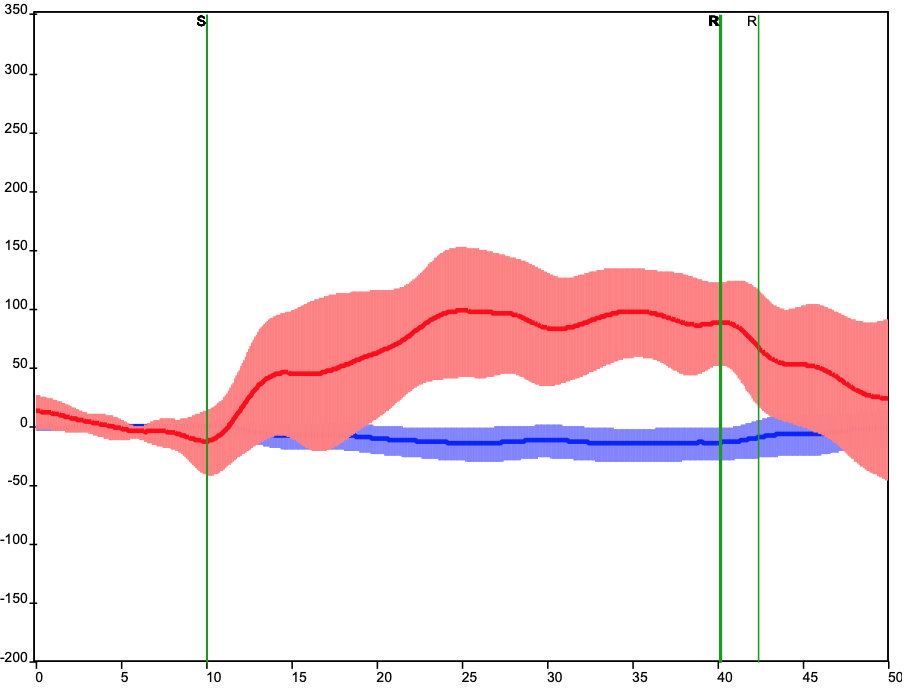

Figure 2: Changes in oxy-hemoglobin (red) and deoxy-hemoglobin (blue) concentrations during brain activation. The plot shows the averaged responses and standard deviation over several trials.
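An averaged response with its standard deviation, as in Figure 2, is typically obtained by cutting the continuous signal into epochs around each stimulus onset, subtracting a pre-stimulus baseline, and averaging across trials. The following is a minimal sketch of that idea, assuming a single-channel concentration signal and known onset samples. The function name `block_average` and the default window lengths are hypothetical choices for illustration, not the settings used for the figure.

```python
import numpy as np


def block_average(signal, onsets, fs, pre_s=2.0, post_s=15.0):
    """Average baseline-corrected epochs around stimulus onsets.

    signal : 1-D array with one concentration trace (e.g. oxy-hemoglobin).
    onsets : stimulus onsets as sample indices.
    fs     : sampling rate in Hz.
    pre_s  : seconds kept before each onset (used as baseline).
    post_s : seconds kept after each onset.

    Returns (time_axis_in_seconds, mean_response, std_response).
    """
    signal = np.asarray(signal, dtype=float)
    pre = int(round(pre_s * fs))
    post = int(round(post_s * fs))
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > signal.size:
            continue  # skip trials that do not fit entirely in the recording
        epoch = signal[start:stop]
        # Subtract the mean of the pre-stimulus window, if there is one.
        baseline = epoch[:pre].mean() if pre > 0 else 0.0
        epochs.append(epoch - baseline)
    if not epochs:
        raise ValueError("no complete epochs found for the given onsets")
    epochs = np.array(epochs)
    time = np.arange(-pre, post) / fs
    return time, epochs.mean(axis=0), epochs.std(axis=0)
```

Plotting the returned mean with a shaded band of plus and minus one standard deviation, once for oxy-hemoglobin and once for deoxy-hemoglobin, gives a figure of the same kind as the one above.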

What is more… what if we could also use fNIRS to estimate the physiological responses mediated by the ANS? If you’re familiar with this technology, you might have read about the “physiological noise” in fNIRS signals and how to filter it out, but… what if we used it instead? It would mean that we could evaluate CNS and ANS interplay with fewer devices, while benefiting from a more realistic setting.
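To give a feeling for what "using the noise" could mean in practice, here is one simple possibility, sketched under assumptions: the heartbeat shows up in fNIRS signals as a pulsation at roughly one cycle per second, so a heart rate estimate can be read off the strongest spectral peak in a plausible cardiac band. This is not the method of the project described here. The function name `estimate_heart_rate_bpm` and the 0.8-2.5 Hz band are hypothetical choices, and the sampling rate must be high enough (more than twice the upper band limit) for the peak to be resolved.

```python
import numpy as np


def estimate_heart_rate_bpm(signal, fs, band=(0.8, 2.5)):
    """Estimate heart rate from the dominant spectral peak in a cardiac band.

    signal : 1-D array, e.g. an oxy-hemoglobin trace from one channel.
    fs     : sampling rate in Hz.
    band   : (low, high) frequency limits in Hz; an assumed cardiac range.

    Returns the estimated heart rate in beats per minute.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                      # remove the constant offset
    window = np.hanning(x.size)           # reduce spectral leakage
    power = np.abs(np.fft.rfft(x * window)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    if not in_band.any():
        raise ValueError("sampling rate or signal length too low for this band")
    peak_freq = freqs[in_band][np.argmax(power[in_band])]
    return 60.0 * peak_freq
```

Applied to short sliding windows instead of the whole recording, the same estimate would yield a heart rate trace over time, which is closer to what an analysis of emotional responses would need.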

My name is María Sofía Sappia, MSc. As an Early Stage Researcher in the RHUMBO Marie Skłodowska-Curie ITN project, I will be conducting multimodal experiments within virtual reality environments to address these questions. I will combine narrative with spatial and audio-visual cues within these environments to elicit specific emotions and analyze their effects on both the central and autonomic nervous systems. As a result, I expect to come up with a model of human emotions that identifies a subject’s emotional state at a given moment.

The RHUMBO project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 813234.

This blog post reflects only the author’s view and the Agency and the Commission are not responsible for any use that may be made of the information it contains.

References

[1] A. Deak, “Brain and emotion: Cognitive neuroscience of emotions”, Review of Psychology, vol. 18, no. 2, pp. 71–80, 2011.

[2] J. Marín-Morales, J. L. Higuera-Trujillo, A. Greco, J. Guixeres, C. Llinares, E. P. Scilingo, M. Alcañiz, and G. Valenza, “Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors”, Scientific Reports, vol. 8, no. 1, p. 13657, 2018.

[3] B. Seraglia, L. Gamberini, K. Priftis, P. Scatturin, M. Martinelli, and S. Cutini, “An exploratory fNIRS study with immersive virtual reality: A new method for technical implementation”, Frontiers in Human Neuroscience, vol. 5, p. 176, 2011.

[4] D. Colombo, J. Fernández-Álvarez, A. G. Palacios, P. Cipresso, C. Botella, and G. Riva, “New technologies for the understanding, assessment, and intervention of emotion regulation”, 2019.

[5] S. Tak and J. C. Ye, “Statistical analysis of fNIRS data: A comprehensive review”, NeuroImage, vol. 85, pp. 72–91, 2014.