Integrating real-time fNIRS with biofeedback to promote fluency in people who stutter

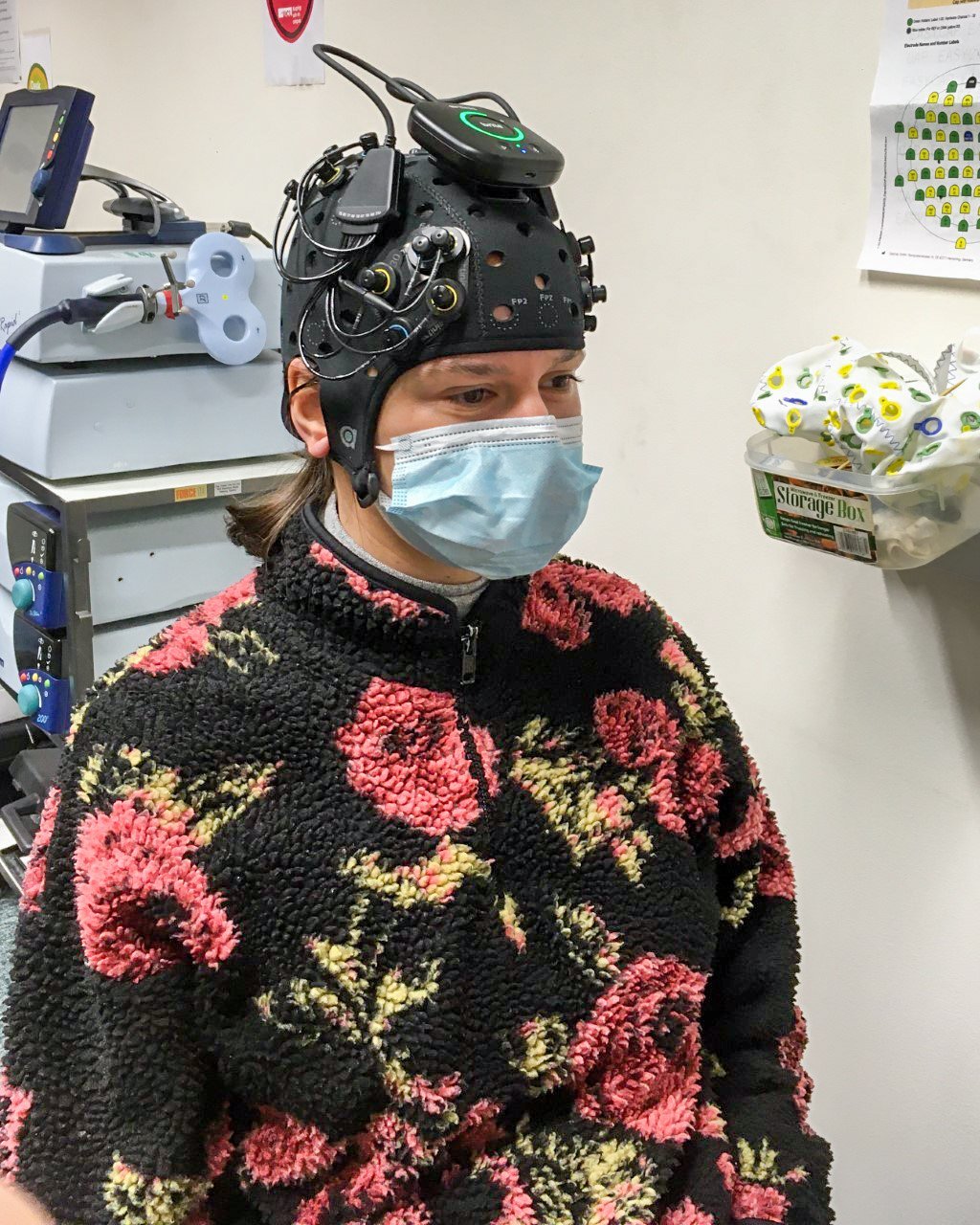

Liam Barrett, one of the Win a Brite winners, has been busy collecting data for his research project on using biofeedback and fNIRS to promote fluency in people who stutter. He was kind enough to share his setup and progress with us, which you can read all about in this blog post!

Over at the Speech Lab at University College London, we are in the midst of data collection with the wearable fNIRS system, Brite, from Artinis. We’ve been investigating the haemodynamic biomarkers of stuttering along with cortical responses to altered feedback during speech.

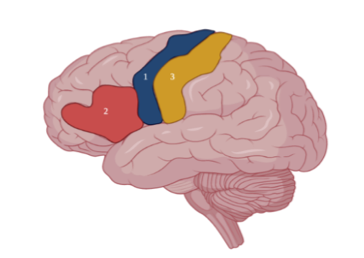

We’ve tried a range of different optode templates, such as four 2x2 grids plus two short separation channels (SSCs) over the bilateral inferior frontal gyri [the IFG is involved in language production] and the bilateral post-central gyri [which provide somatosensory feedback, important for speech motor control] (figures 1 & 2).

We got optode digitization up and running (figure 3), so we can estimate where the fNIRS signal originates for each participant!

“Can’t wait to plug the data into our machine learning models to try and decode different aspects of speech control from the haemodynamic signal!”

About the project

In this video, Liam Barrett, one of the Win a Brite winners, elaborates on their research and explains how they will incorporate functional near-infrared spectroscopy (fNIRS) to promote fluency in people who stutter.

Research on neurocognitive aging explores how aging affects cognitive functions like memory, attention, and problem-solving, which vary widely across individuals. Dr. Claudia Gonzalez, PI of the ABC Lab, leads research focused on understanding the neural and cognitive changes in aging adults. In this interview, Dr. Gonzalez shares her insights and discusses how fNIRS helps advance our understanding of how aging influences brain function and behavior.

NIRS can be applied to any tissue, making it possible to measure brain and muscle oxygenation simultaneously. Read this blog post to learn more about application areas that use NIRS on muscle and brain at the same time, recently published literature, and the solutions Artinis offers to make this possible.

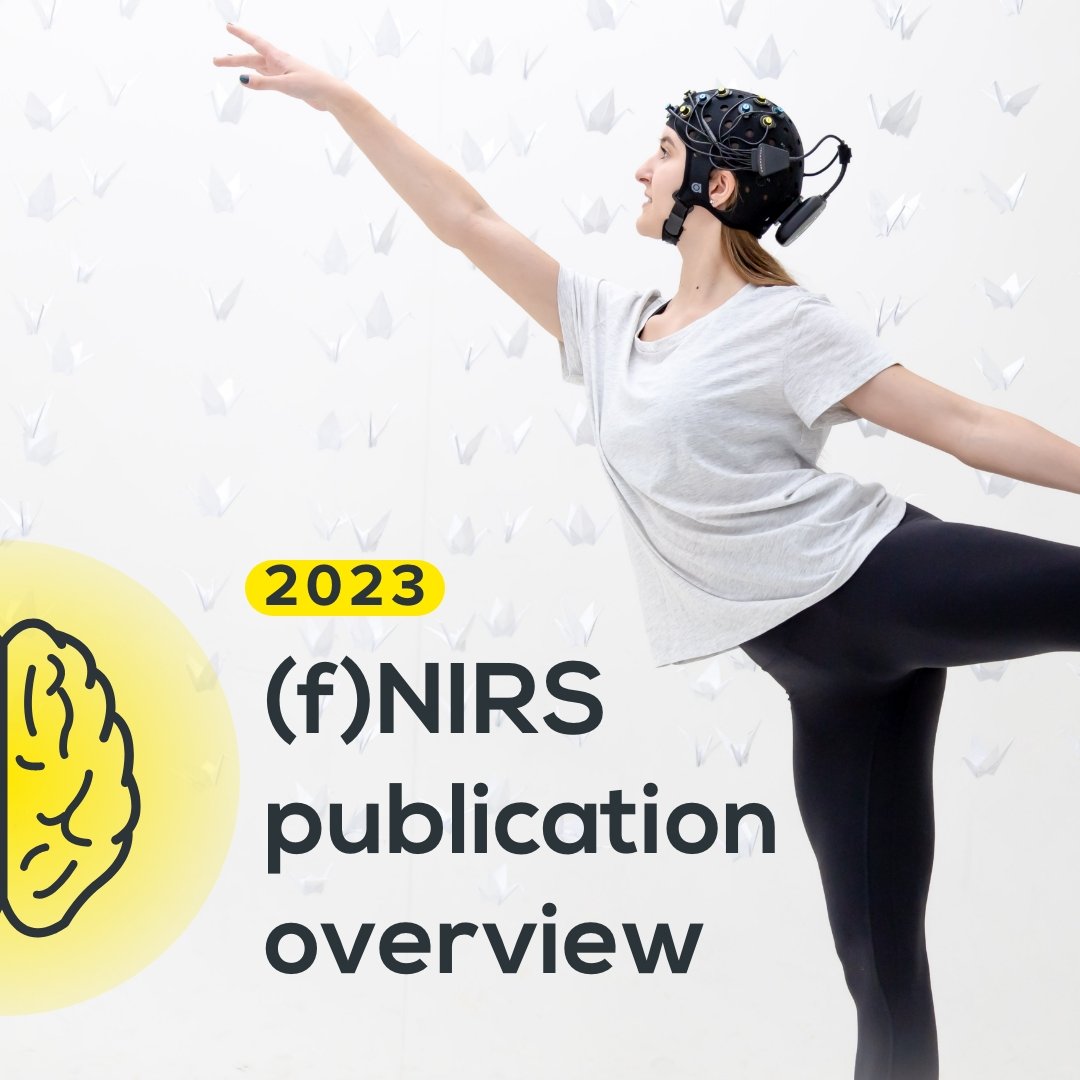

We are proud that a growing number of publications in 2023 used our (f)NIRS devices to measure brain activity. In this blog post, we list application areas with papers released last year that used our devices. We also highlight and summarize interesting publications per application category.

fNIRS is increasingly used in field studies. Max Slutter and colleagues were among the first to perform such a study, measuring brain activity with fNIRS in football players during penalty kicks. Watch our new video interview with Max Slutter to learn more about performing research with fNIRS on the field, and about the advantages and challenges this can bring.

We have received a new update from Liam Barrett, one of the Win a Brite winners, whose research focuses on using biofeedback and fNIRS to promote fluency in people who stutter. In this blog post, he shares his findings on the hemodynamic differences in planned and spontaneous speech between fluent speakers and people who stutter.

More than 110 papers using our (f)NIRS devices in neuroscience and sports science were submitted last year. This blog post gives an overview of all papers published in 2021 using Artinis (f)NIRS devices, grouped by application field, including cortical brain research, sports science, clinical and rehabilitation, hypoxia research, hyperscanning, and multimodality.

Dr. Paola Pinti is a Senior Research Laboratory Developer in the Birkbeck ToddlerLab. In this interview, she shares her experience in neuroscience, her work in the ToddlerLab, and the use of a multimodal set-up to investigate behavior and the brain in preschool children.

We like to involve the user in our development process from the very beginning. By talking with researchers and clinicians, we learn what drives them and what expectations and suggestions they have for our devices. We constantly try to understand their feelings and see the world from their perspective in order to optimize our NIRS devices. One way of doing this is to observe and question both the user who is working with the device and the subject who is wearing it. In this way, we try to gain new insights for existing and future NIRS products.

In this project, we will focus on one of the most disabling symptoms of Parkinson’s disease, freezing of gait: an episodic absence of, or reduction in, the ability to produce effective stepping despite the intention to walk (Nutt et al., 2011).

In hyperscanning, the brain activity and connectivity of multiple subjects are measured simultaneously during social interaction, for instance in competitive situations. fNIRS is often used as the neuroimaging technology for hyperscanning in cognitive studies due to its portability and relative insensitivity to movement artifacts. In an internal mini-study, we tested the use of Brite Frontal to perform hyperscanning while participants played a competitive game of checkers.